'Morning Run' (2022)

by Timothy “Ill Poetic” Gmeiner, Eito Murakami & Mingyong Cheng

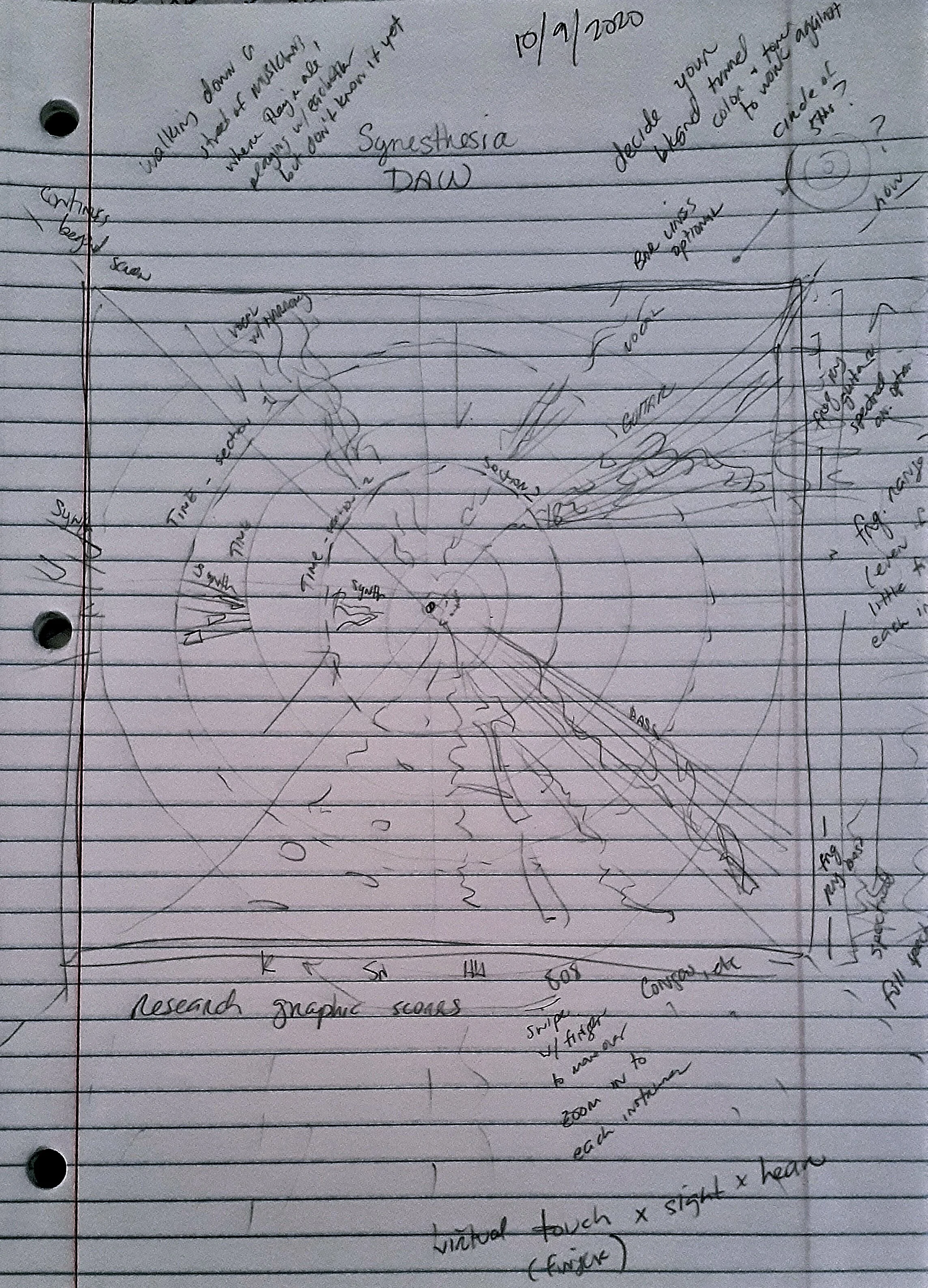

“Morning Run” is a song and accompanying graphic score inspired by my experience with sight & sound synesthesia. This project acts as proof-of-concept for 6-DOF AR/VR Digital Audio Workstation (DAW) development. Progress from the execution of various iterations of “Pigments of Imagination” as well as further studies in digital audio processing, AR/VR/MR/XR, programming and spatial audio are designed to prepare this idea for proper implementation.

Artist Statement / Relation to Theme: My synthesis as a career music producer entering the VR space has incited me to explore the visual, spatial and linear aspects of what it means to move through music in a virtual environment. “Morning Run” probes this fascination by bridging the VR experience of “Pigments of Imagination” (above) toward interactive AR/VR music experience and production. This allows the user to turn their environment into a digital audio workstation and create an art-music synthesis of color, shape and sound with their fingertips.

Logistics - Audio: This project captures field recordings of neighborhood sounds taken on various morning runs. Music was composed around the chopped and processed sounds to create a full composition.

Logistics - Graphic Score: A graphic score was then cultivated from the results of this composition. The images below are designed to simulate the multisensory convergence of synesthesia placed into a neighborhood environment from three perspectives: left-to-right view, top view and first-person horizon view.

The left-to-right view (pictured above) shows the A and B sections of the composition with each block representing two measures. The pattern of percussion peppers the street, the bassline sits in the guard-rail and the Rhodes keys flesh off the guard-rail with additional chords sifting through the building. Sprinkles of loose keys and birds fill the sky.

The top view (pictured above) takes the colors of each block in the left-to-right view and paints them down streets I’ve run, acting as the overall form of the piece.

The first-person horizon view (pictured above) re-imagines music creation and production as a 3-dimensional street to walk down and create in. The graphic score views music not left-to-right, but as a series of ongoing sounds that exist past, present and future. In this example, measures of time are counted moving toward the horizon line, and frequency is measured along the Y axis. Each element of the song (kick, snare, keys, vocals, etc.) streaks toward the center-point within its own sub-section. The colors are informed by the frequency of the instrument and are meant to interact loosely with the environment.
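The mapping described above (measures receding toward the horizon, frequency on the Y axis, color informed by frequency) can be sketched in a few lines of Python. This is only an illustrative sketch, not the method used to draw the score: the function names, the 20 Hz to 20 kHz range, the `focal` constant, the `lane_x` parameter standing in for an element's sub-section, and the low-is-red, high-is-violet palette are all assumptions made for the example.

```python
import colorsys
import math

# Assumed audible range in Hz; not taken from the score itself.
F_MIN, F_MAX = 20.0, 20000.0


def log_position(freq_hz):
    """Map a frequency to 0..1 on a logarithmic scale, clamped to the range."""
    f = min(max(freq_hz, F_MIN), F_MAX)
    return math.log(f / F_MIN) / math.log(F_MAX / F_MIN)


def project(measure, freq_hz, lane_x=0.0, focal=4.0):
    """Project a musical event onto a 2D view whose vanishing point is (0, 0).

    measure: how many measures ahead of the viewer the event sits (>= 0).
    freq_hz: frequency of the element, placed on the Y axis.
    lane_x:  hypothetical horizontal offset (-1..1) of the element's
             sub-section at the viewer's position.
    focal:   hypothetical constant controlling how quickly events shrink.
    """
    scale = focal / (focal + measure)  # 1 at the viewer, toward 0 at the horizon
    y_near = log_position(freq_hz) * 2.0 - 1.0  # -1 (low) to +1 (high)
    return lane_x * scale, y_near * scale


def color_for(freq_hz):
    """Assumed palette: hue runs from red (low) to violet (high)."""
    hue = log_position(freq_hz) * 0.75
    return colorsys.hsv_to_rgb(hue, 1.0, 1.0)


if __name__ == "__main__":
    # A bass note and a high key, each drawn at measures 0, 2, 4 and 8.
    for name, freq, lane in (("bass", 60.0, -0.5), ("keys", 1200.0, 0.5)):
        for m in (0, 2, 4, 8):
            print(name, m, project(m, freq, lane), color_for(freq))
```

Because every point is scaled by the same depth factor, each element traces a straight streak toward the center-point as the measure count grows, which matches the behavior described for the horizon view.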

Current Status: Currently applying studies of sound-synthesis, acoustics and psychoacoustics toward fundamental signal processing in Pure Data. Recent projects include synthetically re-modeling the Winchester Bell, additive synthesis GUI, and re-creating vintage synthesizer patches. Research studies include analysis of compressor topology as well as various analog reverbs/delays and their digital plugin counterparts remodeled in the Pure Data environment. These projects and research contribute to fundamentals of AR/VR-sound-processing via user interactivity.
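For readers unfamiliar with additive synthesis, the idea is to build a tone by summing sine-wave partials. The sketch below is written in Python rather than Pure Data and is not a transcription of any patch mentioned above; the function name, the default sample rate, and the use of purely harmonic partials are assumptions for illustration (a bell model would typically use inharmonic partials with individual decay envelopes).

```python
import math


def additive(f0, amplitudes, duration=1.0, sample_rate=44100):
    """Sum harmonic sine partials of f0 and return a list of samples in -1..1.

    amplitudes[k] is the level of partial k + 1 (so index 0 is the fundamental).
    """
    n = round(duration * sample_rate)
    # Normalize by the total amplitude so the sum can never exceed full scale.
    norm = sum(abs(a) for a in amplitudes) or 1.0
    samples = []
    for i in range(n):
        t = i / sample_rate
        s = sum(
            a * math.sin(2.0 * math.pi * f0 * (k + 1) * t)
            for k, a in enumerate(amplitudes)
        )
        samples.append(s / norm)
    return samples


if __name__ == "__main__":
    tone = additive(220.0, [1.0, 0.5, 0.25], duration=0.01)
    print(len(tone), max(abs(x) for x in tone))
```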

Future Plans: I speculate that “Morning Run” will develop first as an AR experience based around 360 video. A foundation of continued research in spatial audio, AR/VR/MR/XR, physics, and various subtopics in both digital audio processing and trigonometry will be necessary to implement a working model of this concept. I foresee this project initially modeled as a plugin for existing DAWs (e.g., Ableton) with potential to eventually launch into its own fully-formed DAW. Interactive VR music production does exist, but leading examples still view creation through a video-game lens rooted in modeling physical instruments. “Morning Run” looks to extend the DAW world, interact with it more closely, and add an artistic visual flair.

Core areas of study: interactive environments, embodiment, synesthesia, multisensory integration, spatial audio, computer programming (languages TBD), AR/VR/MR/XR, and various subtopics in DSP, calculus, physics, trigonometry.

Tools used: Ableton Live 10, Envelop Ambisonics, Waves Plugin Series, Alesis V49-key Keyboard / Controller, Adobe Photoshop, Adobe Illustrator, Tascam DR-05 Recorder

Relevant Classes: UCSD: MUS170, MUS172, MUS175, MUS206/267, VIS41, MUS2A, MUS174B, MUS267 // SDCCD: MUSC95